HR Glossary

BARS – Behaviorally Anchored Rating Scale

Managing engineering teams in 2025 requires more than gut feelings about who’s performing well. With remote work reshaping how we evaluate collaboration skills and AI fundamentally changing what “good performance” looks like for developers, many tech leaders are struggling to make performance reviews objective, fair, and genuinely useful.

The reality in today’s tech landscape is that traditional performance appraisals often miss the mark entirely. Generic rating scales can’t capture the nuanced difference between a senior developer who writes elegant, maintainable code and one who ships features quickly but creates technical debt. This is where the behaviorally anchored rating scale becomes invaluable.

What is a Behaviorally Anchored Rating Scale?

A behaviorally anchored rating scale (BARS) is a performance evaluation method that measures job performance by comparing an employee’s behaviors against specific, observable examples tied to different performance levels. Unlike traditional rating scales that rely on subjective terms like “excellent” or “needs improvement,” BARS defines exactly what behaviors characterize each level of performance.

The BARS method was developed specifically to address the subjectivity problems that plague conventional performance appraisals. Instead of asking whether someone is a “good team player” on a scale of 1-5, a behaviorally anchored rating scale describes precisely what collaborative behavior looks like at each performance level—from ineffective to exceptional.

Here’s what most companies miss about performance management: the behaviors that drive success in tech roles are constantly evolving. A BARS approach forces organizations to continuously examine and define what excellent performance actually means in their specific context, making it particularly valuable for fast-moving technical teams.

How the BARS Method Works: The Critical Incident Technique

To develop and implement a behaviorally anchored rating scale, organizations typically use the critical incident technique (CIT). This job analysis method involves collecting real examples of effective and ineffective work behaviors from people who actually do the job—your engineers, product managers, and technical leads.

The critical incident technique works by asking subject matter experts to identify specific situations where someone’s behavior led to notably successful or unsuccessful outcomes. For a DevOps engineer, this might include incidents like:

- Successfully diagnosing and resolving a production outage within SLA requirements

- Implementing infrastructure automation that reduced deployment time by 60%

- Failing to document a critical system change, causing issues for the on-call team

- Proactively identifying and fixing a security vulnerability before it was exploited

These real incidents become the foundation for your performance dimensions. The power of using the critical incident technique is that it grounds your evaluation in actual job performance rather than theoretical ideals about what engineers should do.

Performance Levels in a Behaviorally Anchored Rating Scale

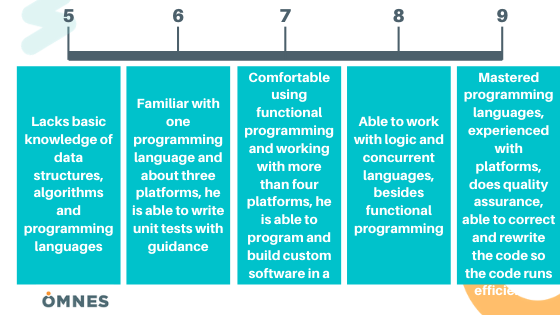

Most behaviorally anchored rating scales use five to nine performance levels. The key is that each level must be anchored to specific, observable behaviors rather than vague descriptors. Let’s break down what this looks like in practice for technical roles.

Five-Point Scale Structure

A typical five-point BARS uses the following levels:

Level 5 – Exemplary Performance: Consistently exceeds expectations with behaviors that set new standards

Level 4 – Above Expected Performance: Regularly demonstrates behaviors that exceed role requirements

Level 3 – Fully Effective Performance: Consistently meets all behavioral expectations for the role

Level 2 – Development Needed: Shows some expected behaviors but requires improvement in key areas

Level 1 – Unsatisfactory Performance: Fails to demonstrate essential behaviors required for the role

The difference between a traditional scale and a behaviorally anchored rating scale is in the details. Each of these levels needs specific behavioral examples that anyone in your organization can observe and verify.

Behaviorally Anchored Rating Scale Examples for Tech Roles

Let’s explore how to develop behavioral anchors for common technical positions. These examples demonstrate how BARS transforms abstract performance criteria into actionable, measurable standards.

Software Engineer: Code Quality and Technical Debt Management

Performance Dimension: Quality of code and attention to technical debt

Level 5: Writes highly maintainable code with comprehensive documentation; proactively refactors legacy systems; establishes coding standards that improve team efficiency; mentors others on clean code principles; consistently receives positive feedback during code reviews for elegant solutions.

Level 4: Produces clean, well-documented code that requires minimal revisions; regularly identifies and addresses technical debt during sprint planning; follows established patterns and best practices; provides helpful feedback in code reviews.

Level 3: Writes functional code that passes code review requirements; documents code according to team standards; addresses technical debt when assigned; participates in code reviews constructively.

Level 2: Submits code requiring multiple revision rounds; documentation is incomplete or unclear; rarely volunteers to address technical debt; provides minimal input during code reviews; sometimes introduces new bugs when fixing existing ones.

Level 1: Consistently delivers code with significant quality issues; ignores coding standards; creates technical debt without acknowledgment; defensive during code reviews; unable to explain design decisions.
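If you plan to keep your BARS in tooling rather than a document, the dimension above could be stored as simple structured data. The following is a minimal sketch, not a prescribed format: the `Dimension` class, its method names, and the abbreviated anchor texts are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Dimension:
    """One BARS performance dimension with a behavioral anchor per level."""

    name: str
    anchors: dict[int, str]  # level -> observable behavior (abbreviated here)

    def anchor_for(self, level: int) -> str:
        """Return the behavioral anchor for a level, or fail loudly."""
        if level not in self.anchors:
            raise ValueError(f"no anchor defined for level {level}")
        return self.anchors[level]

    def missing_levels(self, scale: range = range(1, 6)) -> list[int]:
        """Levels on the scale that still lack a behavioral anchor."""
        return [lvl for lvl in scale if lvl not in self.anchors]


# Anchors shortened from the software engineer example above.
code_quality = Dimension(
    name="Quality of code and attention to technical debt",
    anchors={
        5: "Proactively refactors legacy systems; establishes coding standards",
        4: "Produces clean, well-documented code that requires minimal revisions",
        3: "Writes functional code that passes code review requirements",
        2: "Submits code requiring multiple revision rounds",
        1: "Consistently delivers code with significant quality issues",
    },
)

print(code_quality.anchor_for(3))
print(code_quality.missing_levels())
```

Keeping every level keyed explicitly makes it easy to spot a dimension that has anchors for only some levels before it reaches managers.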

DevOps Engineer: Incident Response and System Reliability

Performance Dimension: Response to production incidents and system stability

Level 5: Leads incident response with calm, systematic approach; implements monitoring that prevents future incidents; creates comprehensive postmortems with actionable improvements; mentors team members on troubleshooting techniques; maintains uptime exceeding SLA by significant margin.

Level 4: Responds quickly to incidents with effective triage; conducts thorough root cause analysis; implements preventive measures based on incident patterns; contributes meaningful insights to postmortems; consistently meets reliability targets.

Level 3: Follows incident response procedures appropriately; participates in root cause analysis; implements assigned remediation tasks; documents incidents according to team standards; maintains acceptable system reliability.

Level 2: Struggles to prioritize during incidents; incomplete root cause analysis; slow to implement fixes; minimal documentation; occasional missed SLA targets due to delayed response.

Level 1: Panics during incidents or escalates prematurely; fails to conduct proper analysis; does not follow established procedures; poor or absent documentation; frequent reliability issues in owned systems.

Engineering Manager: Team Development and Performance

Performance Dimension: Developing team members and fostering growth

Level 5: Creates individualized development plans aligned with both company needs and engineer career goals; provides regular, specific feedback that accelerates growth; successfully retains top performers while improving struggling team members; builds succession pipeline for critical roles; team members report high satisfaction and clear growth trajectory.

Level 4: Conducts meaningful one-on-ones with actionable feedback; identifies skill gaps and arranges appropriate training; supports career development conversations; team shows measurable improvement over time.

Level 3: Holds regular one-on-ones; provides feedback during performance reviews; approves requested training; discusses career goals at least quarterly; maintains stable team retention.

Level 2: Inconsistent one-on-ones; feedback is vague or generic; reactive rather than proactive about development; limited awareness of individual career aspirations; team turnover is higher than the organization's average.

Level 1: Neglects one-on-ones or uses them only for status updates; fails to provide developmental feedback; does not support growth opportunities; unaware of team members’ career goals; high turnover with exit interviews citing lack of development.

The Role of Performance Appraisals in Modern Tech Organizations

Performance appraisals have evolved significantly from annual reviews focused purely on evaluation. With continuous feedback models and agile methodologies reshaping how tech teams work, the behaviorally anchored rating scale offers a framework that works alongside more frequent check-ins.

The current shift toward employee experience in tech means performance management needs to serve development, not just evaluation. A well-designed BARS accomplishes both by making expectations crystal clear while providing a roadmap for improvement.

What we’re seeing with leading tech companies is a hybrid approach: quarterly reviews using behaviorally anchored rating scales for formal assessment, combined with weekly or bi-weekly feedback conversations that reference those same behavioral standards. This creates consistency between informal coaching and formal evaluation.

Advantages of Behaviorally Anchored Rating Scales

When implemented correctly, BARS offers significant benefits for tech organizations:

1. Objectivity in Technical Assessments

The tech industry struggles with bias in performance reviews. Studies suggest that women and underrepresented minorities tend to receive vague feedback, while white men more often get specific technical guidance. BARS combats this by requiring specific behavioral examples, making it harder for unconscious bias to influence ratings.

2. Clear Performance Expectations

For technical roles where the skills landscape shifts rapidly, BARS forces regular conversations about what “good” looks like. When your team adopts a new framework or transitions to microservices, you update the behavioral anchors to reflect new expectations.

3. Better Calibration Across Teams

In organizations with multiple engineering teams, BARS provides consistency. A “Level 4” senior engineer in the frontend team should demonstrate comparable capability to a “Level 4” in the backend team, even though specific behaviors differ. The structured approach enables more accurate calibration sessions.

4. Actionable Feedback for Development

Engineers often complain that performance reviews tell them they need to “improve communication” without explaining what that means. A behaviorally anchored rating scale shows exactly what improved communication looks like: “Provides context in pull requests that helps reviewers understand not just what changed but why” versus “Writes one-line PR descriptions.”

5. Support for Promotion Decisions

Tech companies face challenges justifying promotion decisions, especially in flat organizations. BARS creates documentation showing sustained demonstration of behaviors at the next level, making promotion conversations more objective and defensible.

6. Legal Protection

In industries with strict compliance requirements or during layoffs, having objective, behavior-based performance records provides important legal protection. BARS documentation shows that employment decisions were based on observable performance, not subjective opinions.

Disadvantages and Challenges of BARS

The behaviorally anchored rating scale isn’t without drawbacks. Let’s examine the challenges honestly:

1. Significant Development Investment

Creating BARS for technical roles requires substantial time from your most experienced engineers and managers. For a startup with 20 engineers across five role types, you’re looking at 40-60 hours of SME time plus HR facilitation. That’s expensive.

2. Maintenance Overhead

Tech moves fast. The behavioral anchors you develop for a cloud infrastructure engineer today might be partially obsolete in 18 months as new technologies emerge. Organizations must commit to regular reviews and updates.

3. Complexity for Managers

Evaluating someone against 8-10 performance dimensions with 5 behavioral examples each means managers are tracking 40-50 specific behaviors per direct report. Without proper tooling and training, this becomes overwhelming and defeats the purpose of clarity.

4. Risk of Checkbox Mentality

There’s a danger that BARS becomes a compliance exercise rather than a developmental tool. Managers might simply check boxes without meaningful conversation, or engineers might “perform” specific behaviors without understanding the underlying principles.

5. Difficulty Capturing Innovation

Some of the most valuable contributions in tech, like suggesting an architectural shift that prevents future problems, don’t fit neatly into predefined behavioral categories. BARS must leave room for recognizing unexpected excellence.

6. Not Suitable for All Organization Sizes

Startups with fewer than 30 employees and rapidly evolving roles often find BARS too rigid. The investment makes more sense for organizations with established role definitions and multiple people in similar positions.

How to Develop a Behaviorally Anchored Rating Scale for Your Tech Organization

Based on our experience with similar roles, here’s a practical framework for creating effective BARS:

Phase 1: Preparation (Months 1-2)

Build Executive Buy-In

Start by explaining to your leadership team why traditional performance management isn’t working. Use data: turnover rates, exit interview feedback citing unclear expectations, promotion disputes, or bias in performance reviews. Make the business case for investment.

Form Your Governance Team

Include representatives from engineering leadership, HR, and critically, experienced individual contributors who understand what excellent performance actually looks like. Marketing can help with internal communication strategy.

Identify Priority Roles

Don’t try to build BARS for every role simultaneously. Start with your highest-impact positions: senior engineers, engineering managers, or roles where you’re experiencing performance management challenges.

Phase 2: Behavioral Identification (Months 2-4)

Assemble SME Teams

For each role, recruit 5-8 subject matter experts including:

- Hiring managers for those positions

- Senior practitioners currently in the role

- Team members who work closely with that role

- HR partners who understand job requirements

Conduct Critical Incident Workshops

Ask your SMEs to identify 10-15 critical incidents: specific situations where someone’s behavior significantly impacted outcomes (positively or negatively). For a senior backend engineer, this might include:

- How they approached a complex database migration

- Their response when a junior engineer introduced a bug

- How they handled competing priorities during a critical sprint

- Their behavior during an architecture decision meeting

Document Behavioral Statements

Transform each incident into a behavioral statement focused on observable actions. Instead of “good judgment,” write “evaluated three database solutions against our scale requirements and presented trade-offs to the team with specific performance benchmarks.”

Phase 3: Scale Development (Months 4-6)

Create Performance Dimensions

Group related behaviors into dimensions. For technical roles, common dimensions include:

- Technical skill and code quality

- Problem-solving and system design

- Collaboration and code review practices

- Knowledge sharing and documentation

- Initiative and ownership

- Response to feedback and continuous learning

Develop Behavioral Anchors for Each Level

Your second SME team (different people from the first to ensure objectivity) reviews the behavioral statements and assigns them to performance levels. This retranslation process validates that behaviors truly represent different performance levels.
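One way to make the retranslation step concrete is to have each SME assign levels independently and keep only the statements the panel agrees on. The sketch below assumes a standard-deviation cutoff of 1.0 and uses invented statements and scores; both the threshold and the `retranslate` helper are illustrative, so tune them to your own panel.

```python
from statistics import mean, pstdev

# Hypothetical data: each behavioral statement maps to the levels (1-5)
# that individual SMEs on the second panel assigned to it.
assignments = {
    "Presents trade-offs with specific performance benchmarks": [5, 5, 4, 5, 5],
    "Documents code according to team standards": [3, 3, 3, 4, 3],
    "Escalates incidents to the on-call lead": [1, 3, 5, 2, 4],
}

MAX_SPREAD = 1.0  # assumed cutoff; pick one that suits your panel size


def retranslate(assignments, max_spread=MAX_SPREAD):
    """Split statements into kept anchors (with a level) and rejects.

    A statement is kept when the SMEs' level assignments cluster tightly,
    measured here by population standard deviation.
    """
    kept, rejected = {}, []
    for statement, levels in assignments.items():
        if pstdev(levels) <= max_spread:
            kept[statement] = round(mean(levels))  # nearest whole level
        else:
            rejected.append(statement)
    return kept, rejected


kept, rejected = retranslate(assignments)
for statement, level in kept.items():
    print(f"Level {level}: {statement}")
print("Needs rewording or removal:", rejected)
```

Statements that land in the rejected list are usually ambiguous rather than wrong, so they are good candidates for rewording before a second pass.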

Fill Gaps in the Scale

You’ll likely have behaviors for levels 1, 3, and 5 but need to develop statements for levels 2 and 4. Your SME team creates these intermediate examples based on their experience.

Phase 4: Validation and Refinement (Months 6-8)

Test with Actual Performance Data

If you have recent performance examples, test whether your BARS would have accurately captured those situations. Ask managers: “Does this level 4 description match what Sarah demonstrated during the microservices migration?”

Refine for Clarity

Edit behavioral statements for precision. “Communicates well” becomes “Provides weekly technical updates that non-engineers understand, using diagrams and examples rather than jargon.”

Calibrate Across Teams

Have managers from different teams evaluate the same hypothetical scenarios using your BARS. If they arrive at different ratings, discuss why and refine the behavioral anchors for consistency.
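A quick way to decide which scenarios deserve discussion time is to look at the spread of ratings per scenario. This is a rough sketch with made-up scenarios and manager names; the one-level tolerance in `needs_discussion` is an assumption, not a rule.

```python
# Hypothetical ratings: scenario -> {manager: level assigned using the BARS}
ratings = {
    "Outage triage scenario": {
        "frontend_mgr": 4, "backend_mgr": 4, "platform_mgr": 3,
    },
    "PR feedback scenario": {
        "frontend_mgr": 2, "backend_mgr": 5, "platform_mgr": 3,
    },
}


def needs_discussion(ratings, max_gap=1):
    """Return (scenario, gap) pairs where managers disagree by more than
    max_gap levels, largest disagreement first."""
    flagged = []
    for scenario, by_manager in ratings.items():
        gap = max(by_manager.values()) - min(by_manager.values())
        if gap > max_gap:
            flagged.append((scenario, gap))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


for scenario, gap in needs_discussion(ratings):
    print(f"{scenario}: ratings differ by {gap} levels")
```

A large gap usually points at an anchor that managers read differently, which is exactly the wording to refine.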

Phase 5: Implementation (Months 8-10)

Train Managers Thoroughly

Don’t just distribute the BARS document. Run workshops where managers practice rating sample behaviors, discuss edge cases, and learn to give feedback tied to specific behavioral anchors.

Communicate with Employees

Launch BARS with transparent communication about what’s changing and why. Share the complete behavioral anchors with engineers so they understand exactly what’s expected.

Start with Development Focus

Before using BARS for high-stakes decisions, run one cycle focused purely on development. This builds familiarity and trust before introducing evaluative stakes.

Best Practices for BARS in Tech Organizations

Let’s rethink how you approach implementation to maximize success:

Integrate with Continuous Feedback

BARS shouldn’t be a once-a-year surprise. Reference specific behavioral anchors during code reviews, one-on-ones, and sprint retrospectives. When someone demonstrates level 5 behavior, point it out specifically: “The way you architected this solution with future extensibility in mind—that’s exactly the systems thinking we described in the level 5 anchor.”

Make It a Two-Way Conversation

Engineers should self-assess using the same BARS before their review. The conversation becomes about alignment or discrepancy between self-perception and manager observation, with behavioral examples as evidence.
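To prepare for that conversation, it can help to line up the two sets of ratings and start with the biggest gaps. The snippet below is a small sketch with invented dimension names and scores; `discussion_agenda` is a hypothetical helper, not part of any particular review tool.

```python
# Hypothetical ratings on a 1-5 BARS, keyed by performance dimension.
self_ratings = {"Code quality": 4, "Collaboration": 3, "Documentation": 4}
manager_ratings = {"Code quality": 4, "Collaboration": 4, "Documentation": 2}


def discussion_agenda(self_ratings, manager_ratings):
    """List dimensions where self and manager ratings differ.

    Each entry is (dimension, self minus manager); a positive gap means the
    engineer rated themselves higher. Largest absolute gap comes first.
    """
    gaps = {
        dim: self_ratings[dim] - manager_ratings[dim]
        for dim in self_ratings
        if dim in manager_ratings
    }
    differing = [(dim, gap) for dim, gap in gaps.items() if gap != 0]
    return sorted(differing, key=lambda item: abs(item[1]), reverse=True)


for dimension, gap in discussion_agenda(self_ratings, manager_ratings):
    direction = "self higher" if gap > 0 else "manager higher"
    print(f"{dimension}: {abs(gap)} level(s) apart ({direction})")
```

The number only sets the agenda; the conversation itself should still rest on the behavioral examples each side brings.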

Update Regularly

Schedule annual reviews of your BARS with your SME team. Are the behaviors still relevant? Have new expectations emerged? Is AI changing what we need from our engineers? Treat BARS as a living document.

Use Technology Wisely

Performance management platforms can help managers track behavioral observations throughout the review period rather than relying on recency bias. But don’t let the tool drive the process—keep the focus on genuine behavioral examples.
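Even without a dedicated platform, a dated observation log goes a long way against recency bias. The sketch below assumes a simple `Observation` record and a hypothetical `months_without_observations` check that shows which months of a review period have no notes yet; the field names and sample entries are illustrative.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Observation:
    """One dated behavioral observation tied to a BARS dimension."""

    observed_on: date
    dimension: str
    level: int
    note: str


def months_without_observations(log, start: date, end: date):
    """Return (year, month) pairs in the review period with no observations."""
    seen = {(o.observed_on.year, o.observed_on.month) for o in log}
    missing = []
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        if (year, month) not in seen:
            missing.append((year, month))
        month += 1
        if month > 12:  # roll over into the next year
            year, month = year + 1, 1
    return missing


# Hypothetical log for one engineer in a January-to-March review period.
log = [
    Observation(date(2025, 1, 14), "Incident response", 4,
                "Led triage calmly and documented the root cause"),
    Observation(date(2025, 3, 3), "Code quality", 3,
                "PR passed review after one revision round"),
    Observation(date(2025, 3, 20), "Code quality", 4,
                "Refactored a legacy module during sprint planning"),
]

print(months_without_observations(log, date(2025, 1, 1), date(2025, 3, 31)))
```

An empty month is a prompt to go back through pull requests, incident notes, or one-on-one notes before the review is written.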

Complement with Other Data

BARS captures what you can observe in behavior, but pair it with other signals: code metrics, peer feedback, project outcomes, and 360 reviews. No single evaluation method should stand alone.

BARS for IT Recruiters and Talent Acquisition

The behaviorally anchored rating scale offers unique advantages for technical recruiting:

Structured Interview Evaluation

When you develop behavioral anchors for a role, you’ve essentially created your structured interview rubric. Instead of asking candidates generic questions, you can design interview scenarios that reveal whether they demonstrate level 3, 4, or 5 behaviors.

For a senior engineering role, you might present a scenario: “You’re reviewing a pull request that solves the immediate problem but introduces technical debt. The engineer is junior and defensive about feedback. How do you handle this?”

Their response reveals collaboration skills, technical judgment, and mentoring ability—all tied to your BARS performance dimensions.

Candidate Assessment Consistency

When multiple interviewers evaluate candidates using the same behavioral anchors, hiring decisions become more objective. You can calibrate after interviews by comparing notes on which performance levels the candidate demonstrated.

Realistic Job Previews

Sharing behavioral anchors during interviews gives candidates a clear picture of expectations. This transparency helps them self-select out if the role isn’t right, improving retention of new hires.

The Future of Performance Management in Tech

Given the current market shift toward AI integration in engineering workflows, BARS will need to evolve. We’re already seeing organizations add performance dimensions around:

- Effective use of AI coding assistants while maintaining code quality

- Ability to validate and explain AI-generated solutions

- Judgment about when to use AI tools versus manual implementation

- Prompt engineering skills for technical documentation

The behaviorally anchored rating scale framework adapts well to these changes because it forces explicit conversations about evolving expectations rather than assuming everyone shares the same implicit understanding.

Should Your Tech Organization Implement BARS?

The behaviorally anchored rating scale works best for organizations that:

- Have at least 50-100 employees with defined role categories

- Struggle with inconsistent performance evaluation across teams

- Need to justify promotion and compensation decisions objectively

- Have resources to invest in development and maintenance

- Value clear expectations and developmental feedback

- Face compliance requirements or legal scrutiny around performance management

BARS is probably not right if you:

- Are a startup with fewer than 30 people and rapidly evolving roles

- Have a strong continuous feedback culture with minimal need for formal reviews

- Lack time for proper implementation (half-hearted BARS is worse than none)

- Have highly specialized, one-off roles with no performance patterns to anchor to

Conclusion: Making Performance Management Work for Your Tech Teams

The reality of managing technical teams in 2025 is that performance expectations are more complex than ever. The behaviorally anchored rating scale offers a framework for clarity, objectivity, and development—but only when implemented thoughtfully.

The key to success is treating BARS not as an HR compliance tool but as a collaborative effort to define and develop excellence in your organization. When engineers understand exactly what behaviors distinguish a level 3 from a level 4 performer, they have a roadmap for growth. When managers have specific behavioral examples to reference, performance conversations become constructive rather than contentious.

If you’re considering BARS for your tech organization, start small. Pilot with one or two critical roles, invest in proper development using the critical incident technique, and commit to maintaining it as your organization evolves. Done well, a behaviorally anchored rating scale transforms performance management from a dreaded annual ritual into a genuine driver of individual and organizational growth.

Let’s discuss whether BARS makes sense for your specific technical hiring challenges and organizational context. The right performance management approach depends on your team structure, growth stage, and strategic priorities—and there’s no one-size-fits-all solution.